Understanding Yahoo Finance Data Access

Accessing financial data from Yahoo Finance offers valuable insights for investors and analysts. However, understanding the available methods and their limitations is crucial for successful data retrieval. This section explores the primary approaches to accessing Yahoo Finance data, comparing their strengths and weaknesses.

Yahoo Finance Data Access Methods

Yahoo Finance primarily provides data through its publicly accessible website. Two main methods exist for extracting this information: utilizing unofficial APIs (since Yahoo doesn’t officially provide a comprehensive, fully supported API) and web scraping. Each approach presents unique challenges and benefits.

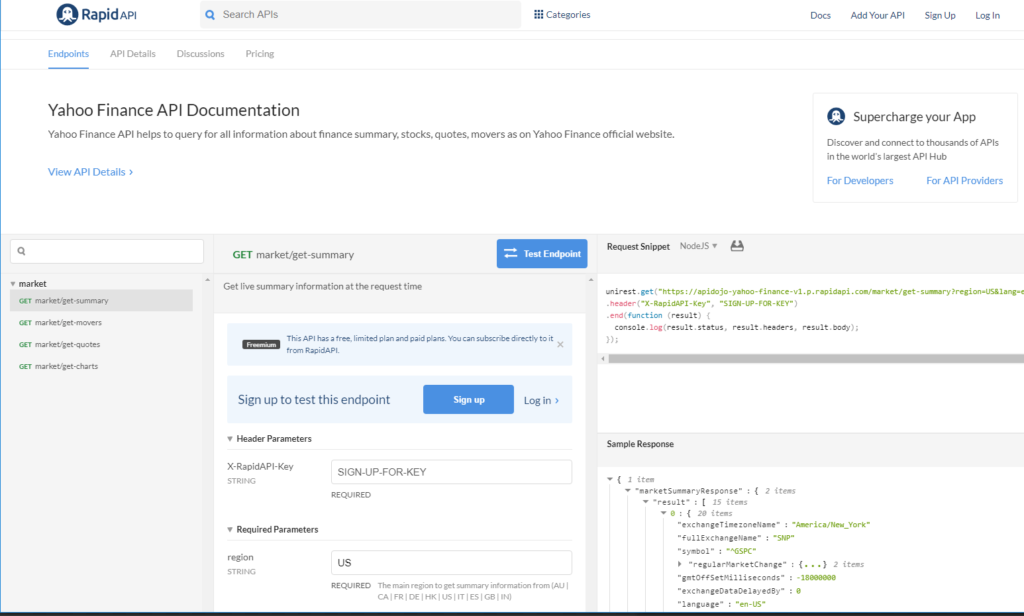

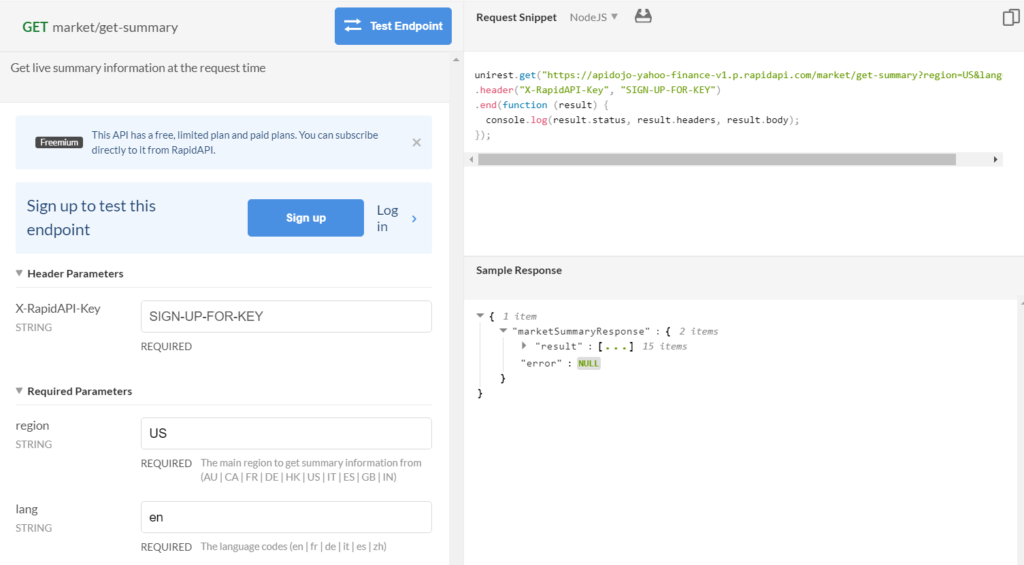

API Usage

While Yahoo Finance doesn’t offer a formal, documented API, several community-maintained libraries and projects attempt to interface with Yahoo’s data structures. These often rely on reverse-engineering Yahoo’s internal data retrieval mechanisms. This means the reliability of these unofficial APIs can be inconsistent. Changes to Yahoo Finance’s website structure can easily break these tools, requiring updates or even rendering them completely unusable. Furthermore, these unofficial APIs typically offer limited functionality compared to a formally supported API, often focusing on specific data types such as historical stock prices.

Web Scraping

Web scraping involves using software to automatically extract data from Yahoo Finance’s website. This method offers more flexibility, allowing access to a wider range of data than most unofficial APIs. However, it’s inherently more complex to implement. You need to understand HTML parsing and potentially deal with anti-scraping measures Yahoo might employ, such as rate limiting or CAPTCHAs. Furthermore, the structure of Yahoo Finance’s website can change, requiring adjustments to your scraping code. This fragility is a major drawback compared to a stable, officially supported API.
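As a minimal sketch of the HTML-parsing step, the snippet below pulls headline text and links out of a small HTML fragment with Python’s standard `html.parser` module. The markup, the `headline` class name, and the `HeadlineParser` class are assumptions made up for illustration; Yahoo Finance’s real page structure is different and changes over time.

```python
from html.parser import HTMLParser

# Hypothetical markup: the real Yahoo Finance page structure is different
# and can change without notice.
SAMPLE_HTML = """
<ul>
  <li><a class="headline" href="/news/a1">Apple Announces New Product</a></li>
  <li><a class="other" href="/ads/x">Sponsored</a></li>
  <li><a class="headline" href="/news/a2">Markets Close Higher</a></li>
</ul>
"""


class HeadlineParser(HTMLParser):
    """Collects (title, href) pairs from <a class="headline"> elements."""

    def __init__(self):
        super().__init__()
        self.headlines = []
        self._href = None  # href of the headline link currently being read
        self._text = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "a" and attrs.get("class") == "headline":
            self._href = attrs.get("href")
            self._text = []

    def handle_data(self, data):
        # Only keep text that appears inside a headline link.
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.headlines.append(("".join(self._text).strip(), self._href))
            self._href = None


parser = HeadlineParser()
parser.feed(SAMPLE_HTML)
headlines = parser.headlines
```

Because the parser keys on a class name, any rename on the site’s side silently yields an empty list, which is the fragility described above. Checking for an empty result is a cheap way to notice that the page has changed.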

Data Access Reliability and Speed

Neither approach is dependable in the long run. Unofficial APIs depend on Yahoo’s internal, undocumented data mechanisms, so a change on Yahoo’s side can render them unusable overnight. Web scraping is exposed to the same risk, since a change in page layout breaks the parsing code, and it is usually slower because of the overhead of downloading and parsing full HTML pages. Both methods are subject to Yahoo Finance’s server load and potential downtime. The speed of data retrieval in both cases is also influenced by factors such as network connectivity and the amount of data being requested.

Error Handling and Rate Limits

Both API usage and web scraping require robust error handling. For unofficial APIs, this includes handling connection errors, data parsing failures, and cases where the API returns unexpected results. Web scraping requires additional error handling for HTTP errors, anti-scraping mechanisms (like CAPTCHAs or rate limits), and variations in website structure. Handling rate limits is critical for both methods. This typically involves implementing delays between requests to avoid overwhelming Yahoo Finance’s servers. For example, you might introduce a random delay of between 1 and 5 seconds between each request to mimic human browsing behavior. Failing to respect rate limits can lead to temporary or permanent blocking of your IP address.
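The delay-and-retry idea can be sketched as follows. This is one possible arrangement, not a prescribed one: `fetch_with_retries` and `fetch_all` are hypothetical helper names, `fetch` stands for whatever function actually performs the request, and the 1 to 5 second window simply mirrors the example figure above.

```python
import random
import time


def fetch_with_retries(fetch, url, max_attempts=3,
                       min_delay=1.0, max_delay=5.0, sleep=time.sleep):
    """Call fetch(url), pausing a random interval before each retry.

    `fetch` is any callable that returns the response body or raises
    OSError on a connection problem (urllib's URLError is an OSError).
    """
    last_error = None
    for attempt in range(1, max_attempts + 1):
        try:
            return fetch(url)
        except OSError as exc:
            last_error = exc
            if attempt < max_attempts:
                sleep(random.uniform(min_delay, max_delay))
    raise RuntimeError(f"gave up after {max_attempts} attempts") from last_error


def fetch_all(fetch, urls, min_delay=1.0, max_delay=5.0, sleep=time.sleep):
    """Fetch several URLs with a random pause between consecutive requests."""
    results = []
    for index, url in enumerate(urls):
        if index > 0:
            sleep(random.uniform(min_delay, max_delay))
        results.append(fetch_with_retries(
            fetch, url, min_delay=min_delay, max_delay=max_delay, sleep=sleep))
    return results
```

Passing `sleep` as a parameter is a design choice that keeps the helpers testable: a test can substitute a function that records the delays instead of actually waiting.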

Exploring the Yahoo Finance API (If Available)

Unfortunately, Yahoo Finance doesn’t offer a publicly documented API specifically for accessing news articles. This is a common limitation with many financial data providers; they often prioritize providing data through their websites and proprietary tools rather than through open APIs. This decision is likely driven by a combination of factors, including data licensing, control over data presentation, and the potential for misuse or abuse of their data resources.

Absence of a Public Yahoo Finance News API

The lack of a readily available API for Yahoo Finance news articles stems from several factors. First, the news articles themselves are often aggregated from various sources, requiring complex licensing agreements and data handling. Second, Yahoo Finance might prioritize its own user experience and the presentation of news within its platform, potentially seeing an API as a disruption to that user flow. Finally, uncontrolled access to a large volume of news articles through an API could pose challenges in terms of rate limiting, data security, and maintaining the quality of the service.

Alternative Approaches to Accessing Yahoo Finance News Data

While a direct API is unavailable, there are alternative approaches to accessing Yahoo Finance news data. Web scraping is a common technique, although it’s crucial to respect Yahoo Finance’s terms of service and robots.txt file to avoid overloading their servers or violating their usage policies. Third-party libraries and tools might also offer functionalities to extract data from Yahoo Finance, though their reliability and long-term viability depend on the maintainers of those tools and the stability of Yahoo Finance’s website structure. It’s vital to approach these methods with caution and ethical considerations.
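Checking robots.txt before scraping can be done with Python’s standard `urllib.robotparser` module. The sketch below parses a made-up rule set so that it runs offline; the rules, the `my-research-bot` user agent, and the example URLs are all assumptions and say nothing about what Yahoo Finance’s actual robots.txt permits.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules for illustration only. In a real script, point the
# parser at the site's own file with robots.set_url(...) and robots.read().
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Allow: /
"""

robots = RobotFileParser()
robots.parse(SAMPLE_ROBOTS_TXT.splitlines())


def is_allowed(url, user_agent="my-research-bot"):
    """Return True if the parsed rules permit this user agent to fetch url."""
    return robots.can_fetch(user_agent, url)


news_ok = is_allowed("https://example.com/news/a1")
private_ok = is_allowed("https://example.com/private/data")
```

A robots.txt check covers only crawler rules. The site’s terms of service still need to be read separately.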

Hypothetical API Call Example and Flowchart

Let’s imagine a hypothetical Yahoo Finance API for news articles existed. The following example demonstrates how API calls might be structured using Python. Note that this code is purely illustrative and won’t function without a real API.

| Code | Description | Result (Hypothetical) |
|---|---|---|
| `import requests` … | This code makes a GET request to a hypothetical API endpoint to retrieve news articles related to Apple (AAPL). It includes an authorization header, assuming API key authentication. | `{'news': [{'title': 'Apple Announces New Product', 'summary': '...', 'url': '...', 'date': '...'}, ...]}` |
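A request of the kind described in the table might be assembled as in the sketch below. It uses the standard library’s `urllib` rather than `requests` so that it runs without third-party packages, and it only builds the request without sending it. The endpoint URL, the `symbol` and `limit` parameters, the Bearer scheme, and the `build_news_request` helper are all hypothetical, since no such public API exists.

```python
from urllib.parse import urlencode
from urllib.request import Request

# Hypothetical endpoint and key: Yahoo Finance offers no such public API.
API_URL = "https://api.example.com/v1/news"
API_KEY = "YOUR_API_KEY"


def build_news_request(symbol, limit=10):
    """Build (but do not send) a GET request for news about `symbol`."""
    query = urlencode({"symbol": symbol, "limit": limit})
    return Request(
        f"{API_URL}?{query}",
        headers={"Authorization": f"Bearer {API_KEY}"},
        method="GET",
    )


request = build_news_request("AAPL")
```

With a real service, the request object would be passed to `urllib.request.urlopen`, ideally with a timeout, and the response body decoded as JSON.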

Hypothetical API Data Retrieval Flowchart

A flowchart illustrating the steps involved in retrieving and processing news data from a hypothetical Yahoo Finance API would look like this:

The flowchart would begin with a “Start” node. It would then proceed to a “Request API Key” step, followed by a “Make API Request” step (specifying parameters like stock symbol and date range). Next, a “Check for Errors” step would handle potential API issues. Successful requests would proceed to a “Parse JSON Response” step, extracting relevant data. Finally, a “Store/Process Data” step would save the extracted news articles in a database or perform further analysis. The flowchart would conclude with an “End” node. The arrows connecting these steps would indicate the flow of data and control.
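The same steps can be expressed as one small function. This is a sketch under stated assumptions: `retrieve_news`, `send`, and `fake_send` are hypothetical names, the transport is a stand-in that returns a canned response, and the `news` key follows the hypothetical result shown earlier.

```python
import json


def retrieve_news(send, symbol, store):
    """Make request -> check for errors -> parse JSON -> store.

    `send` is a hypothetical transport: it takes a symbol and returns
    (status_code, body_text). `store` is any list-like sink.
    """
    status, body = send(symbol)                    # "Make API Request"
    if status != 200:                              # "Check for Errors"
        raise RuntimeError(f"request failed with status {status}")
    try:
        payload = json.loads(body)                 # "Parse JSON Response"
    except json.JSONDecodeError as exc:
        raise RuntimeError("response was not valid JSON") from exc
    articles = payload.get("news", [])
    store.extend(articles)                         # "Store/Process Data"
    return len(articles)


def fake_send(symbol):
    """Stand-in for a real HTTP call; returns a canned hypothetical response."""
    body = json.dumps({"news": [
        {"title": "Apple Announces New Product", "symbol": symbol},
    ]})
    return 200, body


saved = []
count = retrieve_news(fake_send, "AAPL", saved)
```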

Data Processing and Handling

Extracting raw data from Yahoo Finance, whether through an API or web scraping, is only the first step. The subsequent processing and handling of this data are crucial for transforming it into a usable and insightful format. This involves cleaning the data, formatting it consistently, and storing it efficiently for later analysis. This section details the essential steps involved in this critical phase.

Efficient data processing hinges on a well-defined strategy encompassing data cleaning, formatting, and storage. The choice of storage method (database or CSV) depends on factors like data volume, query complexity, and analytical needs. Handling different data formats like JSON and XML requires specific parsing techniques to extract the relevant information.

Data Cleaning and Formatting

Data extracted from Yahoo Finance often contains inconsistencies and inaccuracies. Cleaning involves handling missing values, removing duplicates, and correcting erroneous entries. For instance, dates might arrive in various formats (MM/DD/YYYY, YYYY-MM-DD) and need to be standardized. Similarly, numerical data might contain non-numeric characters such as currency symbols or thousands separators, which need to be removed before analysis. Formatting ensures data uniformity; for example, converting all currency values to a common currency (e.g., USD) and standardizing units of measurement. This step improves the reliability and comparability of the data for further processing and analysis. Consider using regular expressions for robust pattern matching and data manipulation during the cleaning process.
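A few of these cleaning steps are sketched below using only the standard library. The helper names (`normalize_date`, `parse_number`, `deduplicate`) and the choice of YYYY-MM-DD as the target format are assumptions for illustration; real data may need more formats and rules.

```python
import re
from datetime import datetime

# Input formats to try, in order. Extend this tuple as new formats appear.
DATE_FORMATS = ("%Y-%m-%d", "%m/%d/%Y")


def normalize_date(text):
    """Return the date as YYYY-MM-DD, or None if no known format matches."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt).strftime("%Y-%m-%d")
        except ValueError:
            continue
    return None


def parse_number(text):
    """Strip everything except digits, dot, and minus, then convert to float."""
    cleaned = re.sub(r"[^0-9.\-]", "", text)
    try:
        return float(cleaned)
    except ValueError:
        return None  # treat unparseable values as missing


def deduplicate(rows, key):
    """Keep the first row seen for each value of row[key]."""
    seen = set()
    unique = []
    for row in rows:
        if row[key] not in seen:
            seen.add(row[key])
            unique.append(row)
    return unique
```

Returning `None` for values that cannot be parsed keeps missing data explicit, so a later step can decide whether to drop or fill those rows.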

Data Storage and Management

Choosing the right storage method depends on your needs. For smaller datasets, CSV files offer a simple and readily accessible option. Libraries in many programming languages (like Python’s `csv` module) make writing and reading CSV files straightforward. However, for larger datasets or complex queries, a relational database (like PostgreSQL or MySQL) is far more efficient. A database allows for structured storage, efficient querying, and easy data management. Choosing a database requires consideration of scalability, data integrity, and the tools you are familiar with.
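Both storage options can be tried side by side with the standard library. The sketch below swaps in SQLite (via Python’s `sqlite3` module) for PostgreSQL or MySQL so that it runs without a database server; the table layout and the sample rows are made up for illustration.

```python
import csv
import io
import sqlite3

# Sample rows, invented for this example.
articles = [
    {"date": "2024-03-15", "symbol": "AAPL", "title": "Apple Announces New Product"},
    {"date": "2024-03-16", "symbol": "MSFT", "title": "Example Headline"},
]

# Option 1: CSV. An in-memory buffer stands in for a file opened with
# open("news.csv", "w", newline="").
buffer = io.StringIO()
writer = csv.DictWriter(buffer, fieldnames=["date", "symbol", "title"])
writer.writeheader()
writer.writerows(articles)
csv_text = buffer.getvalue()

# Option 2: a relational database. ":memory:" keeps the example self-contained.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE news (date TEXT, symbol TEXT, title TEXT)")
conn.executemany(
    "INSERT INTO news VALUES (:date, :symbol, :title)", articles)
conn.commit()

# Parameterized query: filtering happens in the database, not in Python.
aapl_titles = [
    row[0]
    for row in conn.execute(
        "SELECT title FROM news WHERE symbol = ? ORDER BY date", ("AAPL",))
]
```

The query at the end shows the main practical difference: with CSV, filtering by symbol means reading every row into memory first, while a database can do the filtering itself.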

Handling Different Data Formats

Yahoo Finance data might come in JSON or XML formats. JSON (JavaScript Object Notation) is a lightweight text-based format that’s easy to parse using libraries like Python’s `json` module. XML (Extensible Markup Language), while more verbose, is also readily parsed using libraries like Python’s `xml.etree.ElementTree`. Parsing involves extracting specific data elements from the structured format. For example, in JSON, you would access nested objects and arrays using key names and array indices. In XML, you would traverse the XML tree to find specific elements and their attributes. Error handling is critical; for example, gracefully handling missing elements or malformed data is essential for robust data processing.
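The sketch below parses a small JSON document and a small XML document while tolerating missing fields and malformed input. The sample payloads, their field names, and the helper functions `titles_and_urls` and `xml_titles` are assumptions for illustration and do not reflect any real Yahoo Finance response.

```python
import json
import xml.etree.ElementTree as ET

# Hypothetical payloads; the second entry in each is deliberately incomplete.
JSON_TEXT = (
    '{"news": ['
    '{"title": "Apple Announces New Product", "url": "https://example.com/a1"},'
    '{"title": "No Link Yet"}'
    ']}'
)
XML_TEXT = """<news>
  <article id="a1"><title>Apple Announces New Product</title></article>
  <article id="a2"></article>
</news>"""


def titles_and_urls(json_text):
    """Return (title, url) pairs; a missing key yields None, bad JSON yields []."""
    try:
        payload = json.loads(json_text)
    except json.JSONDecodeError:
        return []
    return [(item.get("title"), item.get("url"))
            for item in payload.get("news", [])]


def xml_titles(xml_text):
    """Map each article's id attribute to its <title> text (None if absent)."""
    try:
        root = ET.fromstring(xml_text)
    except ET.ParseError:
        return {}
    return {article.get("id"): article.findtext("title")
            for article in root.iter("article")}
```

Using `dict.get` and `findtext` instead of direct indexing means a missing element produces `None` rather than an exception, which is one simple way to handle incomplete records gracefully.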

Data Flow Diagram

A simplified diagram illustrating the data flow would show a sequence of boxes and arrows. The first box would represent “Data Retrieval” from Yahoo Finance (either via API or web scraping). An arrow would then lead to a “Data Cleaning and Formatting” box, where data inconsistencies are addressed and the data is standardized. Another arrow would then connect to a “Data Storage” box, representing the chosen storage method (CSV file or database). Finally, an arrow from the “Data Storage” box could lead to a “Data Analysis” box, indicating the final use of the processed data.

Editorial Team